Throughout 2022-2023, the debate over whether neural networks can replace human creative work has not subsided. We’re still in the early stages of developing this technology, but we’re already seeing some amazing results.

An unexpected area in which artificial intelligence has shown considerable competence is the creation of anime.

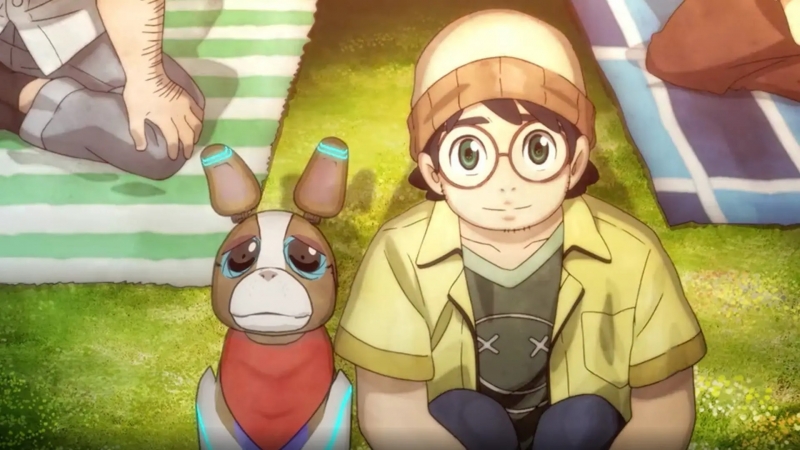

The Dog and The Boy

The Netflix short film The Dog and The Boy is the first high-profile case of AI being used to create anime. Neural networks actually played only a small role in its production. AI was used only to draw the background images, and even these generated illustrations were then handed over to professional animators for finishing.

The film was not without controversy. Netflix Japan stated publicly that it had to use neural network tools during production because of a shortage of animators. Not everyone believed this explanation, and rumors spread online that the company simply did not want to hire animation specialists and was cutting costs this way. Animators themselves joined the criticism.

Rock, Paper, Scissors

Rock, Paper, Scissors is an experimental anime short from the Corridor studio, in which neural networks were used to process live-action footage. Its creators are not animators: the Corridor Crew is a team of VFX artists and enthusiasts who make educational and entertainment content on YouTube.

They even made a separate video about the making of Rock, Paper, Scissors, which offers interesting insights into how AI can be used to create visual effects.

Although Rock, Paper, Scissors does not present itself as a serious work of art, many creative professionals disliked it. Viewers complained about visual “artifacts” (flaws) similar to those produced by rotoscoping, a technique in which animation is traced over live-action footage frame by frame. The flaws include jumping shadows, unnatural character movements, blending textures, and more. In addition, animators began to voice concern and dissatisfaction with Corridor, and the creators even had to respond publicly.

Y’all didn’t “democratize” shit y’all are just lazy thieves spitting on an entire art form, fuck you https://t.co/hao5Od1RyQ

Samuel Deats (@SamuelDeats), February 28, 2023

Evolution of neural networks

We are already somewhat used to neural networks working purely from prompts: you write a few lines of text describing the desired result, and the AI generates an image based on that request. This is currently one of the most common ways of working with neural network tools. On its own, however, this approach is not effective for moving images.

To create animation, the diffusion method has to be applied to existing frames. The neural network then works as follows: first it corrupts the existing image by adding noise to it, and then it performs the reverse process, redrawing details onto the distorted version of the image. This makes it easy to turn a realistic frame, or conversely a crude one, into a more or less stylistically polished drawing.
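The noising half of this process can be sketched in a few lines. This is a minimal illustration under our own assumptions, not the pipeline Corridor used. It follows the standard DDPM forward step, x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * noise, with a linear noise schedule. It treats a frame as a flat list of pixel values and uses a hypothetical `strength` parameter (similar in spirit to the one image-to-image tools expose) to choose how much of the original frame survives. The reverse, redrawing half needs a trained denoising network and is not shown.

```python
import math
import random


def linear_alpha_bars(num_steps: int = 1000, beta_start: float = 1e-4,
                      beta_end: float = 0.02) -> list[float]:
    """Cumulative products alpha_bar_t = prod(1 - beta_i) for a linear beta schedule."""
    alpha_bars = []
    running = 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def noise_frame(pixels: list[float], strength: float, seed: int = 0,
                num_steps: int = 1000) -> tuple[list[float], int]:
    """Forward (noising) half of image-to-image diffusion (illustrative sketch).

    `strength` in [0, 1] picks how far along the schedule to jump: near 0 the
    frame stays almost intact, near 1 it becomes almost pure noise. Returns the
    noisy frame and the timestep t from which a trained denoiser would start.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    alpha_bars = linear_alpha_bars(num_steps)
    t = int(strength * (num_steps - 1))
    a = alpha_bars[t]
    rng = random.Random(seed)  # fixed seed makes the noise reproducible
    noisy = [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0)
             for x in pixels]
    return noisy, t


frame = [0.5, -0.2, 0.9, 0.0]  # toy 4-pixel "image", values scaled to [-1, 1]
light, t_light = noise_frame(frame, strength=0.3, seed=42)
heavy, t_heavy = noise_frame(frame, strength=0.9, seed=42)
print(t_light, [round(p, 2) for p in light])
print(t_heavy, [round(p, 2) for p in heavy])
```

A low strength leaves most of the original frame intact, so the redrawn result stays close to the source footage. A high strength gives the network more freedom to restyle the frame, at the cost of fidelity.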

When applying this method to moving images, the Corridor team ran into a problem: the neural network redraws each frame in a slightly different style, so the resulting video sequence looks chaotic. These difficulties were partly overcome with filters in video editing software. Using the original live-action frames as a reference also made the animation smoother.
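The article does not say which filters were used, so the following is only a toy illustration of the general idea behind deflicker filters. The function names are hypothetical. The sketch measures how much the mean brightness jumps between consecutive frames, then shifts each frame toward an exponential moving average of that brightness. Frames are assumed to be flat lists of grayscale values. Real deflicker tools work locally and account for motion, which this sketch ignores.

```python
def mean_brightness(frame: list[float]) -> float:
    """Average pixel value of one frame."""
    return sum(frame) / len(frame)


def flicker_score(frames: list[list[float]]) -> float:
    """Average absolute change in mean brightness between consecutive frames."""
    means = [mean_brightness(f) for f in frames]
    diffs = [abs(b - a) for a, b in zip(means, means[1:])]
    return sum(diffs) / len(diffs) if diffs else 0.0


def deflicker(frames: list[list[float]], smoothing: float = 0.8) -> list[list[float]]:
    """Shift each frame so its mean brightness follows a running (EMA) average.

    `smoothing` in [0, 1): higher values suppress more flicker but lag behind
    genuine lighting changes; 0 leaves the frames unchanged.
    """
    if not 0.0 <= smoothing < 1.0:
        raise ValueError("smoothing must be in [0, 1)")
    result = []
    target = None
    for frame in frames:
        m = mean_brightness(frame)
        # Exponential moving average of brightness across frames.
        target = m if target is None else smoothing * target + (1.0 - smoothing) * m
        offset = target - m
        result.append([p + offset for p in frame])
    return result


# Toy sequence: the same frame restyled with alternating brightness, the kind of
# jump that appears when every frame is processed independently.
frames = [[0.4, 0.6], [0.7, 0.9], [0.4, 0.6], [0.7, 0.9], [0.4, 0.6]]
print(flicker_score(frames), flicker_score(deflicker(frames)))
```

The `smoothing` parameter is the trade-off: higher values suppress more flicker but make the sequence slower to follow real changes in lighting.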

Where you can try it yourself

Several neural network services already let you try out AI for video content. However, access to these features is not always easy to get, and the technology is not yet mature enough to be practical for the general public. Here are some popular neural network services for generating video:

GPT-4 has new capabilities for working with video content. To experiment with it, you need a ChatGPT Plus subscription; if you already have one, you can access GPT-4 through the OpenAI site;

NightCafe lets you create video content from prompts, but each request consumes credits, the service’s internal currency. You can earn them by actively participating in the NightCafe community;

The Deep Nostalgia service animates photos and other still images that contain faces. To use it, you need to install the MyHeritage app.

Concerns and prospects

Imagine that in a few years technology lets us easily animate any photo or even create video content from scratch: the prospects are stunning. Nothing will hold back our imagination anymore, and we will see a boom of young creators, right? We will probably find out very soon, but not everyone shares this optimism.

Many creators already worry that their professions will become irrelevant in the near future, as artificial intelligence takes on not only illustration but also animation, special effects, music, and more. For now, however, let’s make a bold assumption: neural network tools will not diminish the value of human imagination and abstract thinking.

In fact, you are quite likely to become a more valuable specialist in your field if you master neural networks early. So make friends with them now, because, as we know, progress cannot be stopped.
